Toy Projects, False Confidence, and Interview Failures: What AI Students Get Wrong

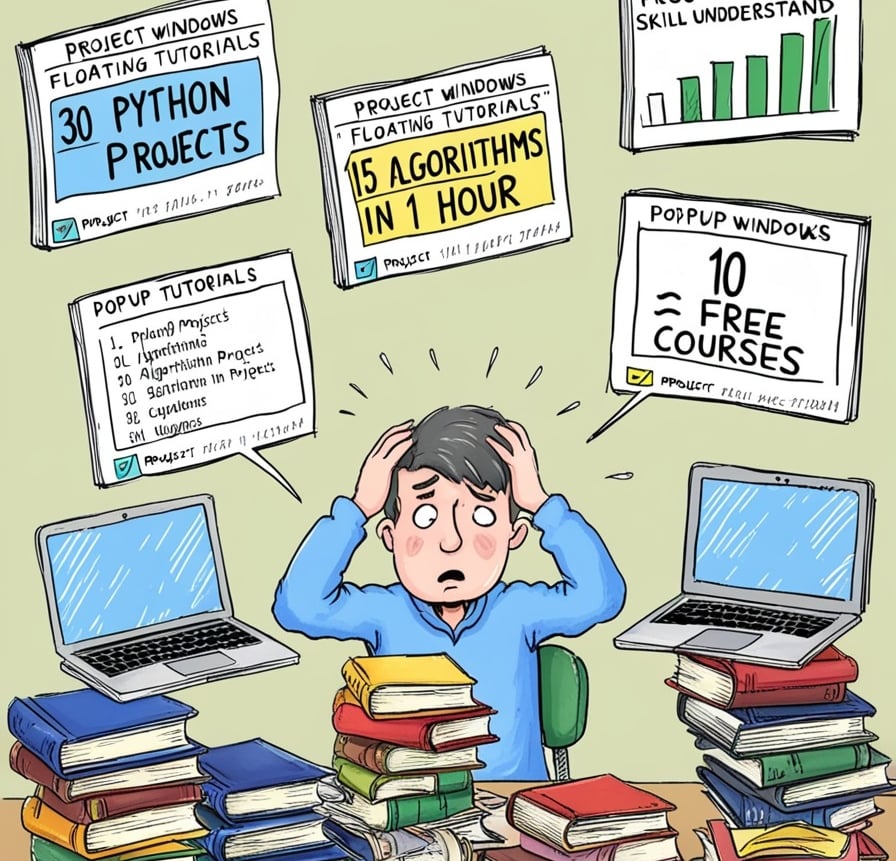


Rakesh Arya

6/9/2025, 4 min read


You’ve downloaded the 30 free Python project ideas. You’ve bookmarked 10 “must-do” machine learning courses. Your YouTube history is filled with tutorials on everything from NumPy tricks to GANs in 5 minutes.

And yet, when someone asks you to solve a real-world problem—say, predicting employee attrition or identifying credit card fraud—you freeze. You know the terms, but not the logic. You’ve seen the tools, but not the workflow. You’ve collected knowledge, but never built wisdom.

This is the silent struggle of today’s AI and data science learners: information overload with no digestion.

We live in a time when resources are more available than ever. But more isn’t always better. In fact, more is often the problem.

The Problem of Overconsumption

Let’s call it the AI Learning Buffet.

You’re presented with endless lists:

30 data science projects for beginners

Top 15 ML algorithms every engineer must know

10 Python tricks to become an expert

5 free courses that are “better than any degree”

And in a panic to not fall behind, students take it all. They hoard tutorials, jump between courses, and build project after project without actually understanding what they’re doing. It becomes a race—not towards mastery, but towards collecting “proof” of productivity.

Here’s what often happens next:

Shallow Learning: Students build a sentiment analysis project using a prewritten notebook. They can run the code but can’t explain how TF-IDF differs from Bag of Words, or when one is likely to work better than the other.

False Confidence: They’ve seen so many models that they believe they’ve “covered it all,” but they’ve never handled missing values or class imbalance on their own.

Mental Fatigue: Constant switching and surface-skimming lead to burnout. Everything feels familiar but nothing feels clear.

In the process, the learner is just busy, not building. The information is abundant—but the insight is absent.
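To make the TF-IDF question above concrete, here is a minimal sketch in plain Python. It assumes a tiny made-up corpus of three reviews and the textbook weighting, count × log(N / document frequency). Libraries such as scikit-learn use a smoothed variant, so their numbers will differ. The point is only to show what raw counts cannot express: a word that appears in every document carries no weight.

```python
import math
from collections import Counter

# Hypothetical toy corpus, invented purely for illustration.
docs = [
    "the movie was great great fun",
    "the movie was boring",
    "the plot was great",
]
tokenized = [d.split() for d in docs]


def bag_of_words(tokens):
    """Raw term counts for a single document."""
    return Counter(tokens)


def tf_idf(tokens, corpus):
    """Textbook TF-IDF: term count * log(N / number of docs containing the term)."""
    n_docs = len(corpus)
    weights = {}
    for term, count in Counter(tokens).items():
        doc_freq = sum(1 for doc in corpus if term in doc)
        weights[term] = count * math.log(n_docs / doc_freq)
    return weights


bow = bag_of_words(tokenized[0])
weights = tf_idf(tokenized[0], tokenized)

for term in sorted(weights, key=weights.get, reverse=True):
    print(f"{term:6s} count={bow[term]}  tfidf={weights[term]:.3f}")
```

In the raw counts, "the" and "fun" look equally important in the first review (one occurrence each). Under TF-IDF, "the" drops to zero because it appears in all three documents, while "fun" gets the largest weight because it is unique to that review. Whether this reweighting actually helps a sentiment model is an empirical question, answered by comparing both representations on held-out data.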

The Illusion of Mastery

If you’ve ever heard a student say, “I’ve done the Titanic dataset, the Iris dataset, and the Boston Housing project,” you’ll recognize the trap.

These projects are useful to begin with—but completing them does not mean you’ve mastered machine learning. What it often creates is a false sense of expertise.

Let’s break this down:

Checklist Learning: Many learners treat their journey like a task list. One project done? Tick. One algorithm coded? Tick. One course watched at 2x speed? Tick.

Buzzword Fluency: They can throw around terms like overfitting, normalization, precision-recall—but can’t explain when to use which metric, or why precision might matter more than accuracy in fraud detection.

Interview Reality Check: In a real interview, no one asks “How many courses have you done?” Instead, they ask, “What did you learn from your last project? What challenges did you face?” And that’s when the illusion shatters.

What’s worse, this superficial learning feels productive. Students genuinely believe they’re making progress. They don’t realize that skipping the "why" and "how" of a technique is what keeps them stuck at the surface.
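The fraud-detection point above is easy to check with a few lines of arithmetic. The sketch below uses invented numbers (1,000 transactions, 10 of them fraudulent) and two hypothetical models; the function names are illustrative and not taken from any library.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn), treating label 1 (fraud) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def report(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Convention used in this sketch: report 0.0 when the denominator is zero.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical data: 1,000 transactions, 10 fraudulent (label 1).
y_true = [1] * 10 + [0] * 990

# Model A never flags anything.
always_legit = [0] * 1000

# Model B catches 8 of the 10 frauds and raises 12 false alarms.
flagger = [1] * 8 + [0] * 2 + [1] * 12 + [0] * 978

report_a = report(y_true, always_legit)
report_b = report(y_true, flagger)
print("Model A:", report_a)
print("Model B:", report_b)
```

Model A wins on accuracy (0.99 against 0.986) while catching no fraud at all. Whether you then prioritize precision or recall depends on what a false alarm costs compared with a missed fraud, and that trade-off is exactly what an interviewer will ask you to reason about.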

Why Depth Beats Quantity

Let me ask you this:

Would you rather skim 20 recipes in a day or cook one dish perfectly, learning how flavors work, how ingredients react, and how to correct mistakes?

Learning AI is no different.

One well-done project—where you handle every piece from messy raw data to final deployment—is more valuable than 30 toy projects copied off GitHub.

Here’s what a single, deep project can teach you:

Problem Framing: Understanding the business need and converting it into a data problem

Data Handling: Cleaning, imputing missing values, dealing with outliers

Exploratory Data Analysis (EDA): Visualizing trends, identifying relationships, making hypotheses

Feature Engineering: Creating new variables, encoding, scaling

Modeling & Tuning: Trying different algorithms, evaluating performance, tuning hyperparameters

Interpretability: Explaining predictions using SHAP, LIME, or even plain logic

Deployment: Putting it on Streamlit or FastAPI, sharing it with the world

With depth, you learn to think. With volume, you learn to copy.
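As one small slice of the data-handling step listed above, here is a sketch of missing-value imputation in plain Python. The income figures and function names are made up for illustration; in a real project you would more likely reach for a library imputer. It surfaces two decisions that a prewritten notebook tends to hide: median versus mean when an outlier is present, and learning the fill value from the training split only.

```python
import statistics

# Hypothetical income values in thousands (invented for illustration).
# None marks a missing entry; 400.0 is an obvious outlier.
train = [32.0, None, 41.0, 38.0, None, 45.0, 400.0, 36.0]
test = [None, 39.0, 44.0]


def fit_imputer(values):
    """Learn the fill value (median of the observed values) from one split."""
    observed = [v for v in values if v is not None]
    return statistics.median(observed)


def apply_imputer(values, fill):
    """Replace missing entries with a previously learned fill value."""
    return [fill if v is None else v for v in values]


# Fit on the training split only, then reuse that value on the test split,
# so nothing about the test data leaks into preprocessing.
fill_value = fit_imputer(train)
train_clean = apply_imputer(train, fill_value)
test_clean = apply_imputer(test, fill_value)

# For comparison: the mean is dragged upward by the single outlier.
mean_fill = statistics.mean([v for v in train if v is not None])

print("median fill:", fill_value)
print("mean fill:  ", round(mean_fill, 2))
print("test after imputation:", test_clean)
```

The median fill is 39.5, close to a typical value, while the mean is pulled to roughly 98.67 by one record. Being able to justify a choice like this is the kind of understanding a deep project builds.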

The Danger of Toy Projects

Toy projects are like training wheels—they help you get started. But here’s the problem: many students never take them off.

Toy projects are simplified problems with clean datasets, clearly defined objectives, and often pre-labeled data. Think of things like:

Classifying flowers using the Iris dataset

Predicting house prices on a perfectly prepared CSV

Analyzing Titanic survivors with a small, thoroughly documented dataset whose few gaps have already been solved in countless public notebooks

These projects are fine for learning syntax, exploring libraries, and getting your feet wet. But relying on them for too long gives students a false comfort zone.

Here’s what they miss out on:

Messy, incomplete data: In real-world scenarios, data is missing, unstructured, or full of noise.

Unclear objectives: No one tells you what accuracy to target or which metric to optimize.

Domain challenges: In fraud detection or healthcare prediction, stakes are high, and assumptions can be dangerous.

Communication & storytelling: Toy projects don’t teach you how to explain your findings to a non-technical stakeholder.

Most importantly, toy projects don’t teach you how to think. They teach you how to run code that already works.

It’s like practicing chess only by memorizing openings—you’ll lose the moment the game goes off-script.

The Power of Mentorship and Focus

In a world drowning in AI content, the rarest thing is clarity. And that’s where mentorship comes in.

A good mentor doesn’t flood you with more resources—they filter them.

They don’t give you shortcuts—they help you see the map.

Here’s what mentorship does that hundreds of tutorials can’t:

Focuses your effort: Instead of trying 10 projects, you go deep into one that builds real skills.

Identifies gaps: A mentor spots when you’ve misunderstood a concept—even if the code runs perfectly.

Builds thinking, not just doing: They push you to ask why, what if, and what else—not just how.

Prepares you for the real world: From model interpretability to ethical questions, mentors expose you to the bigger picture that toy tutorials ignore.

And most importantly, mentorship builds confidence rooted in understanding, not just repetition.

When you combine that with intentional focus—choosing to do fewer things, deeply and meaningfully—you stop being just another “data science enthusiast” and start becoming a true problem-solver.

AI and data science are not about how many projects you've cloned or how many courses you've started.

They're about how deeply you understand what you're doing.

You don’t need 10 courses, 30 projects, or 5 certifications. You need one project done well. One mentor who challenges you. One opportunity to think, not just code.

The goal isn’t to impress with a list. It’s to internalize what matters.

So next time you feel tempted to collect more tutorials, pause.

Ask yourself: Am I learning this to understand—or just to feel like I am?

Because in the end, depth beats distraction. Every time.