Why More Context Isn’t Always Better for AI Agents

More context doesn’t always mean better answers. In fact, too much information can overwhelm AI agents, causing them to miss what truly matters — a problem known as context rot. This blog breaks down the myth of “more is better,” explains key terms in plain language, and shows why quality context beats quantity every time.

Rakesh Arya

10/3/2025 · 5 min read

In late September 2025, Anthropic published “Effective context engineering for AI agents,” a guide that shifts the focus from clever wording to careful selection of what an agent actually sees at each step. In their framing, prompt engineering is about how you ask; context engineering is about what information you include (or exclude) so the model can stay accurate and reliable over long tasks.

While the article introduces powerful practices, one common misconception still needs to be addressed: the belief that more context always improves results. In reality, adding too much detail can overwhelm an AI system, dilute its focus, and cause what is known as context rot, where important details get lost in the noise. This post unpacks that problem in simple terms, explains key ideas like context rot and the “Goldilocks rule” for prompts, and uses everyday examples, such as choosing a restaurant or planning a trip, to show why quality of context matters far more than quantity for AI agents.

What “Context” Really Means

When people talk about “context” in AI, it can sound abstract or technical. But at its core, context is simply the information the model has in front of it when making a decision.

Think about a normal conversation. If you ask a friend, “Where should I eat dinner tonight?”, the context you give matters. If you tell them:

“I’m in Hyderabad and I love biryani” → they can recommend the best biryani spots nearby.

But if you also add: “Here’s my work history, my shopping list, and the story of my cat” → it doesn’t help. It distracts.

For an AI agent, context works the same way. It can include:

The system prompt (the background instructions like “You are a helpful assistant”).

The conversation history (what was said before).

Tool outputs (results from calculators, searches, APIs).

Documents or data (files, reports, or notes you provide).

All of this together forms the “window” the model sees at any moment. Whatever isn’t in the window is invisible to the AI, just as you can’t know a detail that nobody mentioned in the conversation.
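To make the four ingredients concrete, here is a minimal Python sketch of a context window as a simple data structure. The `ContextWindow` class, its field names, the order of the parts, and the word-count estimate are all assumptions made for illustration; real agent frameworks organize and measure context in their own ways.

```python
from dataclasses import dataclass, field


@dataclass
class ContextWindow:
    """Everything the model can see for one step (illustrative structure only)."""

    system_prompt: str
    history: list[str] = field(default_factory=list)
    tool_outputs: list[str] = field(default_factory=list)
    documents: list[str] = field(default_factory=list)

    def parts(self) -> list[str]:
        # The ordering here is an assumption, not a rule.
        return [self.system_prompt, *self.history, *self.tool_outputs, *self.documents]

    def render(self) -> str:
        # Join every part into the single text the model would receive.
        return "\n\n".join(self.parts())

    def approx_tokens(self) -> int:
        # Rough stand-in: counts whitespace-separated words, not real tokens.
        return sum(len(part.split()) for part in self.parts())


focused = ContextWindow(
    system_prompt="You are a helpful assistant.",
    history=["User: I'm in Hyderabad and I love biryani. Where should I eat tonight?"],
)

cluttered = ContextWindow(
    system_prompt=focused.system_prompt,
    history=focused.history,
    documents=[
        "Work history: ...",
        "Shopping list: rice, onions, soap",
        "The story of my cat: ...",
    ],
)

print(focused.approx_tokens(), cluttered.approx_tokens())
```

Both windows contain the same question, but the cluttered one spends part of its space on text that has nothing to do with dinner.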

The Myth of “More is Better”

It feels natural to assume that the more information an AI has, the better its answers will be. After all, when humans prepare for exams or projects, extra notes and references often help. But for AI agents, too much context can backfire.

Why? Because models have a limited attention budget. Think of it like a person trying to listen to ten people talking at once. Each extra detail stretches their focus thinner, making it harder to catch the one piece that actually matters.

Example 1: The Overloaded Teacher

Imagine a teacher grading 100 essays in one night. At first, they notice every detail. By the 70th essay, the sentences blur together, and important points get overlooked. AI systems face the same problem when stuffed with massive amounts of context.

Example 2: Finding a Needle in a Haystack

If you want a restaurant suggestion, giving the AI your city and cuisine preference is enough. But if you also paste in your full resume, last week’s shopping list, and your 20-page travel diary, the key detail (location + food choice) gets buried. The “needle” is there, but hidden in too much hay.

This overload often leads to what’s called context rot—when crucial information fades or gets misinterpreted because it is lost among irrelevant details. Instead of sharper answers, the model becomes less reliable.

Key Terms Explained Simply

Understanding a few key phrases can make this whole idea much clearer. These aren’t just technical words — they describe problems and solutions that come up every time AI agents handle too much information.

1. Context Rot

As more and more information is stuffed into the AI’s input, the important details start to blur and the model recalls them less reliably. It’s like trying to recall the first chapter of a 700-page novel right after finishing the last one: the memory of what happened at the beginning isn’t sharp anymore.

In practice: An AI might forget an early instruction buried under pages of chat history.

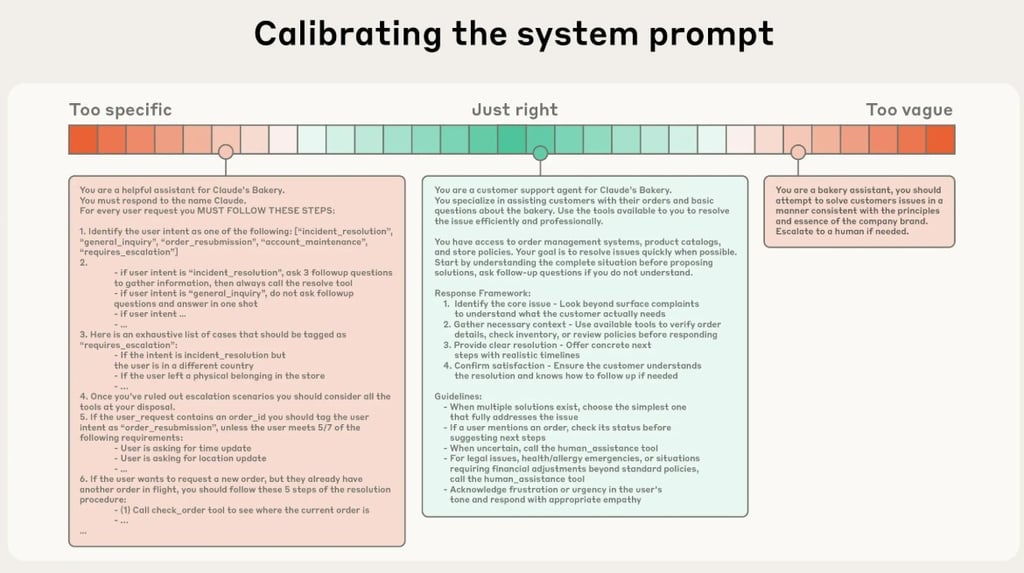

2. The Goldilocks Rule (or Goldilocks Altitude)

Instructions shouldn’t be too detailed or too vague — they need to be “just right.”

Too detailed = rigid and robotic (“Always answer in exactly 7 bullet points, never use any other words”).

Too vague = ambiguous (“Do whatever you think is best”).

In practice: Good context gives the AI just enough structure to stay consistent, while leaving space for flexibility.

3. Just-in-Time Context

Instead of carrying every single fact around, the AI can pull in information only when it’s needed. Think of it like Googling one exact question at the moment you need the answer, instead of trying to memorize the whole internet.

In practice: Instead of pasting a 50-page document into the prompt, the system can retrieve the one relevant paragraph at the right time.
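A rough Python sketch of just-in-time retrieval is shown here. It assumes the document is already split into paragraphs and scores each one by how many words it shares with the question. That scoring is a deliberately crude stand-in for real search or embeddings, and the `retrieve` function and the sample paragraphs are made up for illustration.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real search or embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, paragraphs: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k paragraphs that share the most words with the question."""
    question_words = words(question)
    ranked = sorted(
        paragraphs,
        key=lambda paragraph: len(question_words & words(paragraph)),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up document, already split into paragraphs.
document = [
    "Refund policy: refunds are issued within 14 days of purchase.",
    "Shipping: orders leave the warehouse within two business days.",
    "Company history: founded by two friends in a garage.",
]

question = "How many days do I have to ask for a refund?"
relevant = retrieve(question, document)

# Only the best-matching paragraph goes into the prompt, not the whole document.
prompt = f"Answer using only this excerpt:\n{relevant[0]}\n\nQuestion: {question}"
print(prompt)
```

The model only ever sees the refund paragraph; the shipping and company-history text stays out of the window until a question actually calls for it.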

4. Compaction (Summarization)

When the conversation gets too long, old messages can be summarized into shorter notes. This keeps the important points alive without overwhelming the model with every past word.

In practice: A week-long chat with an AI travel planner might be compacted into one short note: “User prefers budget flights, direct routes, and vegetarian meals.”
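Compaction can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the word budget, the `keep_recent` setting, and the `toy_summarizer` function are hypothetical, and a real system would usually ask a model to write the summary note rather than return a fixed string.

```python
from typing import Callable


def compact(
    messages: list[str],
    summarize: Callable[[list[str]], str],
    max_words: int = 40,
    keep_recent: int = 2,
) -> list[str]:
    """Replace older messages with one summary note once the history gets too long."""
    total_words = sum(len(message.split()) for message in messages)
    if total_words <= max_words or len(messages) <= keep_recent:
        return messages  # Still short enough: leave the history untouched.
    split = len(messages) - keep_recent
    old, recent = messages[:split], messages[split:]
    return [f"Summary of earlier conversation: {summarize(old)}", *recent]


def toy_summarizer(old_messages: list[str]) -> str:
    # Hypothetical stand-in for a model-written summary.
    return "User prefers budget flights, direct routes, and vegetarian meals."


history = [
    "User: I want the cheapest flights you can find, even at odd hours.",
    "User: Please avoid layovers, I only want direct routes this time.",
    "User: I am vegetarian, so book vegetarian meals on every flight.",
    "User: Can you check flights to Goa for next Friday?",
    "Assistant: Sure, checking flights to Goa now.",
]

compacted = compact(history, toy_summarizer)
for line in compacted:
    print(line)
```

The most recent messages are kept word for word because they usually matter most for the next step, while everything older is reduced to a single note.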

Practical Advice — Keeping Context Clean

Knowing the theory is one thing, but how does it translate into practice? The key is to treat context like a limited workspace. The cleaner and more focused it is, the better the AI performs.

1. Keep prompts focused

Only give the details that are actually needed. If the goal is to draft a marketing email, the AI needs the product details and audience, not the entire company history.

2. Summarize past interactions

Instead of carrying every line of a long conversation, reduce it to a few notes that capture the essentials.

Long version: “We talked about travel dates, meal preferences, hotel budget, luggage allowance…”

Summarized: “Prefers budget flights, vegetarian meals, mid-range hotels.”

3. Retrieve, don’t overload

Rather than pasting a 50-page document into the prompt, point the AI to the right section when needed. Think of it like giving a chapter reference instead of handing someone the whole library.

4. Avoid contradictions and clutter

Irrelevant or conflicting details slow down reasoning and can confuse the AI. Just as a human struggles when given mixed instructions, an AI does too.
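One simple way to catch contradictions before they reach the model is to check the notes you plan to send. The sketch below assumes, purely for illustration, that instructions are stored as (topic, value) pairs; the `find_conflicts` function and the sample notes are hypothetical.

```python
def find_conflicts(notes: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Group values by topic and return topics with more than one distinct value."""
    seen: dict[str, set[str]] = {}
    for topic, value in notes:
        # Normalize case and spacing so exact repeats are not flagged as conflicts.
        seen.setdefault(topic, set()).add(value.strip().lower())
    return {topic: values for topic, values in seen.items() if len(values) > 1}


notes = [
    ("tone", "formal"),
    ("length", "under 150 words"),
    ("tone", "casual"),  # Contradicts the first note.
    ("length", "Under 150 words"),  # A repeat, not a contradiction.
]

conflicts = find_conflicts(notes)
print(conflicts)
```

Here only the tone is flagged, so that conflict can be resolved before the notes are sent to the model.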

Everyday Example

Imagine booking a trip with an AI assistant. What it really needs is:

Travel dates

Destination

Budget

Adding details like school grades, last week’s shopping list, or the story of a pet will not help book flights — it only clouds the process.
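The same idea can be expressed as a small filter. In this sketch the field names, the `build_booking_context` function, and the sample profile are all made up for illustration: the code passes along only the three details the booking task needs and ignores everything else.

```python
# The three details the trip-booking task needs (names chosen for this example).
REQUIRED_FIELDS = ("travel_dates", "destination", "budget")


def build_booking_context(profile: dict[str, str]) -> str:
    """Keep only the fields the booking task needs and drop the rest."""
    missing = [name for name in REQUIRED_FIELDS if name not in profile]
    if missing:
        raise ValueError(f"Missing required details: {', '.join(missing)}")
    return "\n".join(f"{name}: {profile[name]}" for name in REQUIRED_FIELDS)


# Made-up profile mixing useful details with clutter.
profile = {
    "travel_dates": "12-15 March",
    "destination": "Goa",
    "budget": "20,000 INR",
    "school_grades": "...",
    "shopping_list": "...",
    "pet_story": "...",
}

context = build_booking_context(profile)
print(context)
```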

More context does not automatically mean better answers. Just like a person gets distracted when overloaded with too many details, AI agents lose clarity when flooded with unnecessary information. The result is context rot—where the truly important instructions fade into the background.

The solution is context engineering: focusing on quality, trimming away clutter, and only pulling in information when it’s needed. By keeping prompts focused, summarizing long histories, and practicing “just-in-time” retrieval, AI systems can stay sharp and reliable over long tasks.

In short, the most effective AI agents are not the ones given the most information, but the ones guided by the right information.